This article was co-authored by Andrew Ansley.

Things, not strings. If you haven’t heard this before, it comes from a famous Google blog post that announced the Knowledge Graph.

The announcement’s eleventh anniversary is barely a month away, yet many still struggle to understand what “things, not strings” actually means for SEO.

The quote is an attempt to convey that Google understands things and is no longer a simple keyword detection algorithm.

In May 2012, one could argue that entity SEO was born. Google’s machine learning, aided by semi-structured and structured knowledge bases, could understand the meaning behind a keyword.

The ambiguous nature of language finally had a long-term solution.

So if entities have been important for Google for over a decade, why are SEOs still confused about entities?

Good question. I see four reasons:

- Entity SEO as a term has not been used widely enough for SEOs to become comfortable with its definition and therefore incorporate it into their vocabulary.

- Optimizing for entities greatly overlaps with the old keyword-focused optimization methods. As a result, entities get conflated with keywords. On top of this, it was not clear how entities played a role in SEO, and the word “entities” is sometimes interchangeable with “topics” when Google speaks on the subject.

- Understanding entities is a boring task. If you want deep knowledge of entities, you’ll need to read some Google patents and know the basics of machine learning. Entity SEO is a far more scientific approach to SEO, and science just isn’t for everyone.

- While YouTube has massively impacted knowledge distribution, it has flattened the learning experience for many subjects. The creators with the most success on the platform have historically taken the easy route when educating their audience. As a result, content creators haven’t spent much time on entities until recently. Because of this, you need to learn about entities from NLP researchers, and then you need to apply that knowledge to SEO. Patents and research papers are key. Once again, this reinforces the first point above.

This article is a solution to all four problems that have prevented SEOs from fully mastering an entity-based approach to SEO.

By reading this, you’ll learn:

- What an entity is and why it’s important.

- The history of semantic search.

- How to identify and use entities in the SERP.

- How to use entities to rank web content.

Why are entities important?

Entity SEO is the future of where search engines are headed with regard to choosing what content to rank and determining its meaning.

Combine this with knowledge-based trust, and I believe that entity SEO will be the future of how SEO is done in the next two years.

Examples of entities

So how do you recognize an entity?

The SERP has several examples of entities that you’ve likely seen.

The most common types of entities are related to locations, people, or businesses.

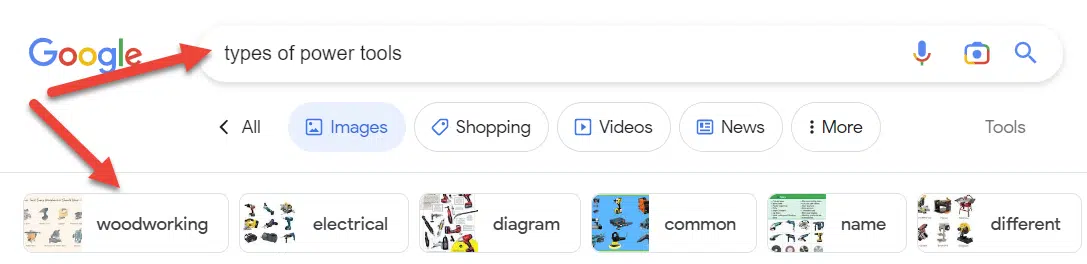

Perhaps the best example of entities in the SERP is intent clusters. The more a topic is understood, the more these search features emerge.

Interestingly enough, a single SEO campaign can alter the face of the SERP when you know how to execute entity-focused SEO campaigns.

Wikipedia entries are another example of entities. Wikipedia provides a great example of information associated with entities.

As you can see from the top left, the entity has all sorts of attributes associated with “fish,” ranging from its anatomy to its importance to humans.

While Wikipedia contains many data points on a topic, it is by no means exhaustive.

What is an entity?

An entity is a uniquely identifiable object or thing characterized by its name(s), type(s), attributes, and relationships to other entities. An entity is only considered to exist when it exists in an entity catalog.

Entity catalogs assign a unique ID to each entity. My agency has programmatic solutions that use the unique ID associated with each entity (services, products, and brands are all included).

If a word or phrase is not within an existing catalog, it doesn’t mean that the word or phrase is not an entity, but you can usually tell whether something is an entity by its existence in the catalog.

It is important to note that Wikipedia is not the deciding factor on whether something is an entity, but the site is best known for its database of entities.

Any catalog can be used when talking about entities. Typically, an entity is a person, place, or thing, but ideas and concepts can also be included.

Some examples of entity catalogs include:

- Wikipedia

- Wikidata

- DBpedia

- Freebase

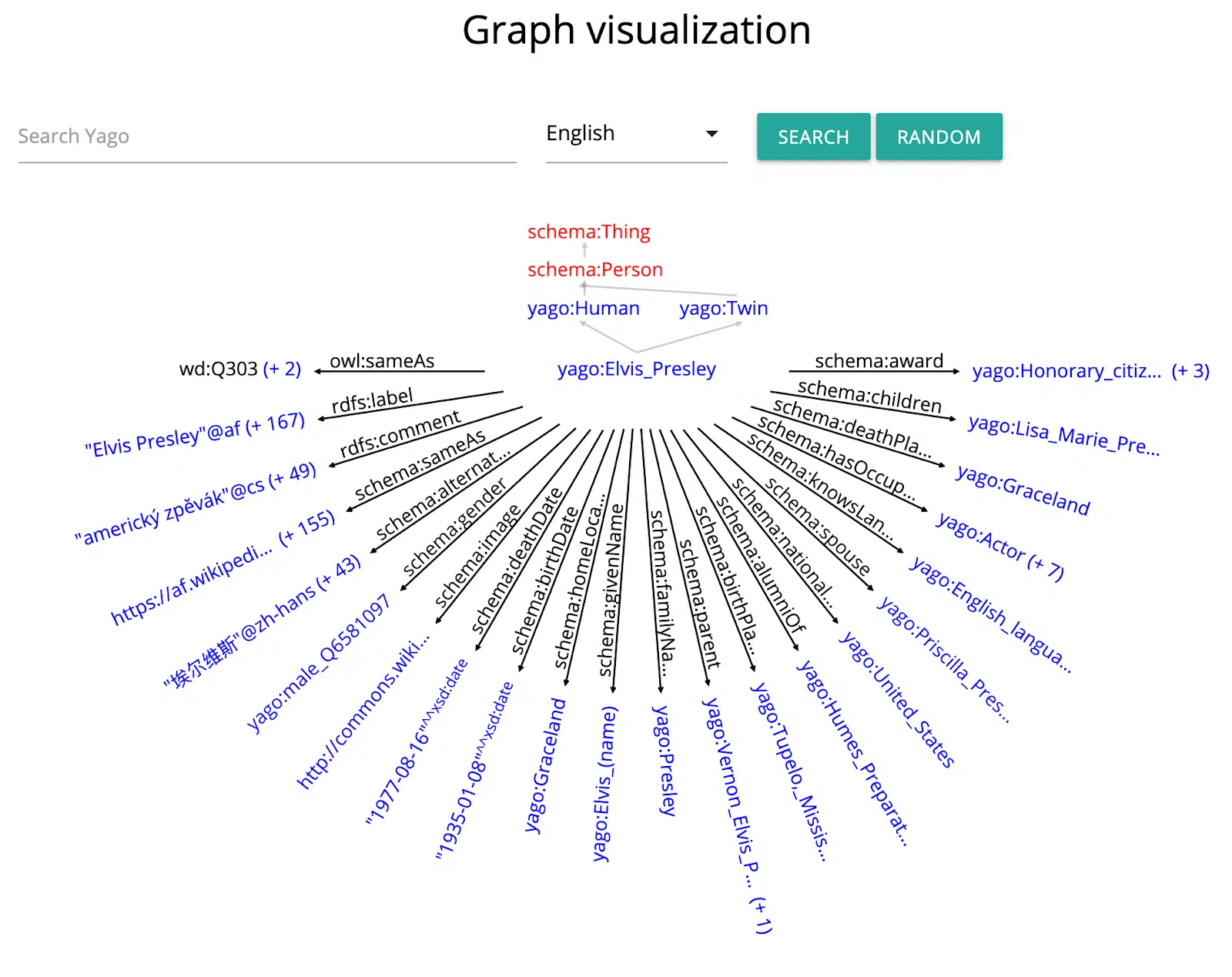

- Yago

Entities help to bridge the gap between the worlds of unstructured and structured data.

They can be used to semantically enrich unstructured text, while textual sources may be utilized to populate structured knowledge bases.

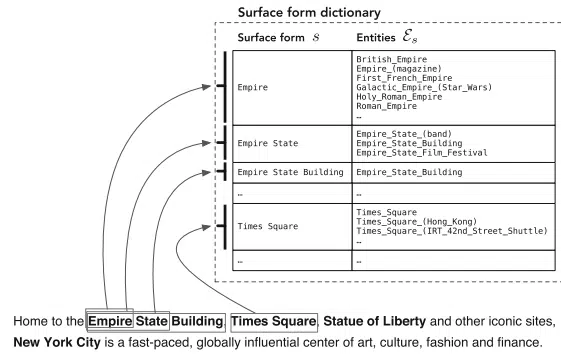

Recognizing mentions of entities in text and associating these mentions with the corresponding entries in a knowledge base is known as the task of entity linking.

Entities allow for a better understanding of the meaning of text, both for humans and for machines.

While humans can relatively easily resolve the ambiguity of entities based on the context in which they are mentioned, this presents many difficulties and challenges for machines.

The knowledge base entry of an entity summarizes what we know about that entity.

The world is constantly changing, and new facts keep surfacing. Keeping up with these changes requires a continuous effort from editors and content managers. This is a demanding task at scale.

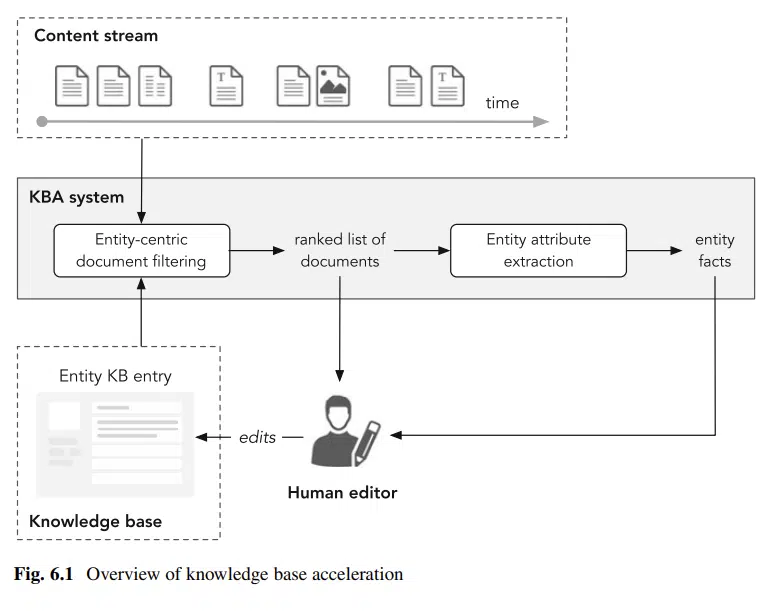

By analyzing the contents of documents in which entities are mentioned, the process of finding new facts or facts that need updating may be supported or even fully automated.

Scientists refer to this as the problem of knowledge base population, which is why entity linking is important.

Entities facilitate a semantic understanding of the user’s information need, as expressed by the keyword query, and the document’s content. Entities thus may be used to improve query and/or document representations.

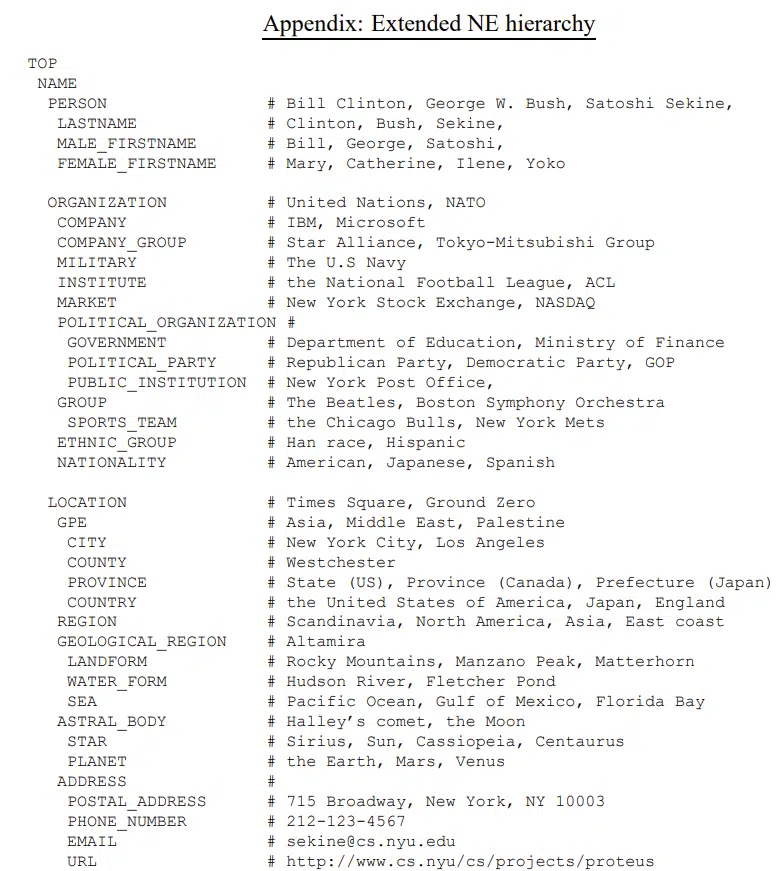

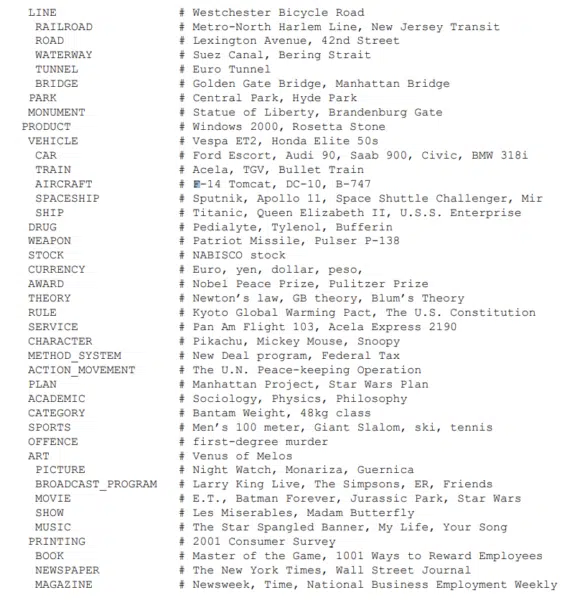

In the Extended Named Entity research paper, the author identifies around 160 entity types. Here are two of seven screenshots from the list.

Certain categories of entities are more easily defined, but it’s important to remember that concepts and ideas are entities. Those two categories are very difficult for Google to scale on its own.

You can’t teach Google with just a single page when working with vague concepts. Entity understanding requires many articles and many references sustained over time.

Google’s history with entities

On July 16, 2010, Google purchased Freebase. This purchase was the first major step that led to the current entity search system.

After investing in Freebase, Google realized that Wikidata had a better solution. Google then worked to merge Freebase into Wikidata, a task that was far more difficult than expected.

Five Google scientists wrote a paper titled “From Freebase to Wikidata: The Great Migration.” Key takeaways include:

“Freebase is built on the notions of objects, facts, types, and properties. Each Freebase object has a stable identifier called a “mid” (for Machine ID).”

“Wikidata’s data model relies on the notions of item and statement. An item represents an entity, has a stable identifier called “qid”, and may have labels, descriptions, and aliases in multiple languages; further statements and links to pages about the entity in other Wikimedia projects – most prominently Wikipedia. Contrary to Freebase, Wikidata statements do not aim to encode true facts, but claims from different sources, which can also contradict each other…”

Entities are defined in these knowledge bases, but Google still had to build its entity knowledge for unstructured data (i.e., blogs).

Google partnered with Bing and Yahoo and created Schema.org to accomplish this task.

Google provides schema instructions so website managers can have tools that help Google understand the content. Remember, Google wants to focus on things, not strings.

In Google’s words:

“You can help us by providing explicit clues about the meaning of a page to Google by including structured data on the page. Structured data is a standardized format for providing information about a page and classifying the page content; for example, on a recipe page, what are the ingredients, the cooking time and temperature, the calories, and so on.”

Google continues by saying:

“You must include all the required properties for an object to be eligible for appearance in Google Search with enhanced display. In general, defining more recommended features can make it more likely that your information can appear in Search results with enhanced display. However, it is more important to supply fewer but complete and accurate recommended properties rather than trying to provide every possible recommended property with less complete, badly-formed, or inaccurate data.”
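
To make the recipe example from the quote concrete, here is a minimal sketch in Python that builds Schema.org `Recipe` markup as JSON-LD. The helper name `recipe_jsonld` and the recipe values are invented for illustration, and the property set shown is not a statement of what Google currently requires; check the structured data documentation for your content type.

```python
import json

def recipe_jsonld(name, ingredients, cook_time_iso, calories):
    """Build a Schema.org Recipe description as a JSON-LD string.

    Hypothetical helper for illustration; all values passed in are invented.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "recipeIngredient": ingredients,
        "cookTime": cook_time_iso,  # ISO 8601 duration, e.g. PT20M = 20 minutes
        "nutrition": {
            "@type": "NutritionInformation",
            "calories": calories,
        },
    }
    return json.dumps(data, indent=2)

markup = recipe_jsonld(
    name="Pan-fried trout",
    ingredients=["2 trout fillets", "1 tbsp butter", "1 lemon"],
    cook_time_iso="PT20M",
    calories="320 calories",
)

# JSON-LD is typically embedded in the page inside a script tag.
print('<script type="application/ld+json">\n' + markup + "\n</script>")
```

Supplying a few properties accurately, as above, is in the spirit of the quoted guidance: complete and correct beats exhaustive and sloppy.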

More could be said about schema, but suffice it to say schema is an incredible tool for SEOs looking to make page content clear to search engines.

The last piece of the puzzle comes from Google’s blog announcement titled “Improving Search for The Next 20 Years.”

Document relevance and quality are the main ideas behind this announcement. The first method Google used for determining the content of a page was entirely focused on keywords.

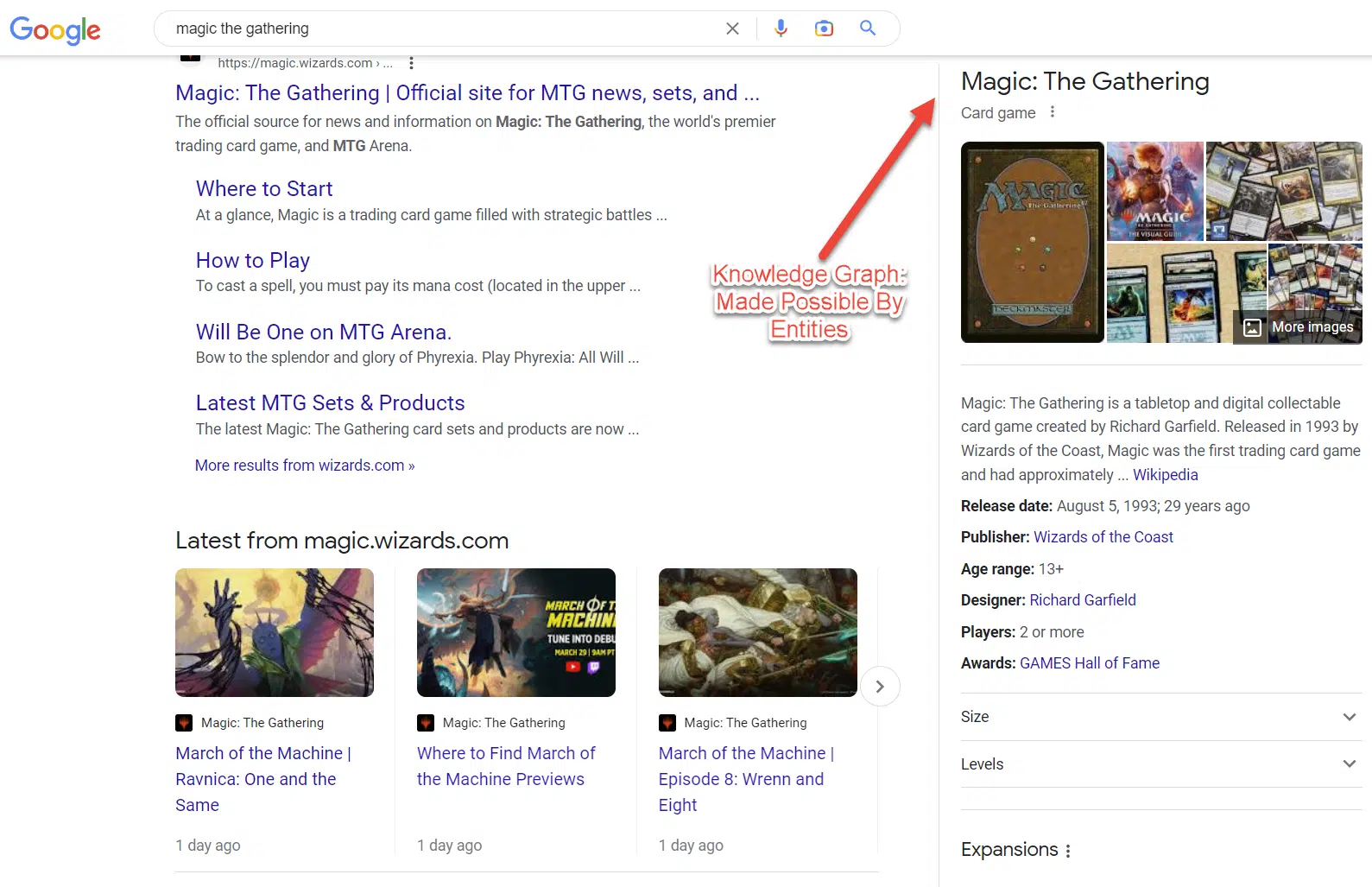

Google then added topic layers to search. This layer was made possible by knowledge graphs and by systematically scraping and structuring data across the web.

That brings us to the current search system. Google went from 570 million entities and 18 billion facts to 800 billion facts and 8 billion entities in less than 10 years. As this number grows, entity search improves.

How is the entity model an improvement from previous search models?

Traditional keyword-based information retrieval (IR) models have an inherent limitation of not being able to retrieve (relevant) documents that have no explicit term matches with the query.

If you use ctrl + f to find text on a page, you use something similar to the traditional keyword-based information retrieval model.

An insane amount of data is published on the web every day.

It simply isn’t feasible for Google to understand the meaning of every word, every paragraph, every article, and every website.

Instead, entities provide a structure from which Google can minimize the computational load while improving understanding.

“Concept-based retrieval methods attempt to tackle this challenge by relying on auxiliary structures to obtain semantic representations of queries and documents in a higher-level concept space. Such structures include controlled vocabularies (dictionaries and thesauri), ontologies, and entities from a knowledge repository.”

– Entity-Oriented Search, Chapter 8.3

Krisztian Balog, who wrote the definitive book on entities, identifies three possible solutions to the traditional information retrieval model.

- Expansion-based: Uses entities as a source for expanding the query with different terms.

- Projection-based: The relevance between a query and a document is understood by projecting them onto a latent space of entities.

- Entity-based: Explicit semantic representations of queries and documents are obtained in the entity space to augment the term-based representations.

The goal of these three approaches is to gain a richer representation of the user’s information need by identifying entities strongly related to the query.
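
As a rough illustration of the first approach, the sketch below contrasts ctrl + f style term matching with a toy expansion-based lookup. The documents, the entity catalog, and the function names are all invented; real systems weight expansion terms rather than treating them as equal alternatives.

```python
# Toy documents; the text is invented for illustration.
DOCS = {
    "doc1": "how to choose a fly rod for trout",
    "doc2": "best rainbow trout flies for spring",
    "doc3": "bass fishing with spinning reels",
}

# Hypothetical entity catalog: entity ID -> known name variants.
ENTITY_NAMES = {
    "E1": ["oncorhynchus mykiss", "rainbow trout", "steelhead"],
}

def term_match(query, docs):
    """Ctrl+F style retrieval: every query term must appear in the document."""
    q_terms = query.lower().split()
    return [doc_id for doc_id, text in docs.items()
            if all(t in text.lower().split() for t in q_terms)]

def expand_query(query, entity_names):
    """Add the name variants of any entity mentioned in the query."""
    variants = [query.lower()]
    for names in entity_names.values():
        if any(name in query.lower() for name in names):
            variants.extend(n for n in names if n not in variants)
    return variants

def expanded_match(query, docs, entity_names):
    """Return documents containing any expanded variant as a phrase."""
    variants = expand_query(query, entity_names)
    return [doc_id for doc_id, text in docs.items()
            if any(v in text.lower() for v in variants)]

print(term_match("steelhead", DOCS))                    # []
print(expanded_match("steelhead", DOCS, ENTITY_NAMES))  # ['doc2']
```

The plain term match finds nothing because no document contains the string "steelhead", while the expanded query reaches the document that uses another name for the same entity.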

Balog then identifies six algorithms associated with projection-based methods of entity mapping (projection methods relate to mapping entities into a vector space and comparing the resulting vectors using geometry).

- Explicit semantic analysis (ESA): The semantics of a given word are described by a vector storing the word’s association strengths to Wikipedia-derived concepts.

- Latent entity space model (LES): Based on a generative probabilistic framework. The document’s retrieval score is taken to be a linear combination of the latent entity space score and the original query likelihood score.

- EsdRank: EsdRank is for ranking documents, using a combination of query-entity and entity-document features. These correspond to the notions of query projection and document projection components of LES, respectively, from before. Using a discriminative learning framework, additional signals can also be incorporated easily, such as entity popularity or document quality.

- Explicit semantic ranking (ESR): The explicit semantic ranking model incorporates relationship information from a knowledge graph to enable “soft matching” in the entity space.

- Word-entity duet framework: This incorporates cross-space interactions between term-based and entity-based representations, leading to four types of matches: query terms to document terms, query entities to document terms, query terms to document entities, and query entities to document entities.

- Attention-based ranking model: This is by far the most complicated one to describe.

Here is what Balog writes:

“A total of four attention features are designed, which are extracted for each query entity. Entity ambiguity features are meant to characterize the risk associated with an entity annotation. These are: (1) the entropy of the probability of the surface form being linked to different entities (e.g., in Wikipedia), (2) whether the annotated entity is the most popular sense of the surface form (i.e., has the highest commonness score), and (3) the difference in commonness scores between the most likely and second most likely candidates for the given surface form. The fourth feature is closeness, which is defined as the cosine similarity between the query entity and the query in an embedding space. Specifically, a joint entity-term embedding is trained using the skip-gram model on a corpus, where entity mentions are replaced with the corresponding entity identifiers. The query’s embedding is taken to be the centroid of the query terms’ embeddings.”
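
The four features in the quote can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the commonness scores and the two-dimensional embeddings are invented, the entropy uses log base 2 (the quote does not name a base), and the function names are hypothetical rather than taken from the book.

```python
import math

# Invented commonness scores P(entity | surface form) for the mention "jaguar".
COMMONNESS = {
    "Jaguar_Cars": 0.5,
    "Jaguar_(animal)": 0.25,
    "Jacksonville_Jaguars": 0.25,
}

def ambiguity_features(commonness, annotated_entity):
    """Return (entropy, is_top_sense, margin) for one surface form."""
    probs = [p for p in commonness.values() if p > 0]
    entropy = -sum(p * math.log2(p) for p in probs)            # feature 1
    ranked = sorted(commonness.items(), key=lambda kv: kv[1], reverse=True)
    is_top_sense = ranked[0][0] == annotated_entity             # feature 2
    second = ranked[1][1] if len(ranked) > 1 else 0.0
    margin = ranked[0][1] - second                              # feature 3
    return entropy, is_top_sense, margin

def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]

def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

entropy, is_top, margin = ambiguity_features(COMMONNESS, "Jaguar_Cars")
print(entropy, is_top, margin)  # 1.5 True 0.25

# Feature 4 (closeness): query embedding = centroid of its term embeddings.
query_term_vectors = [[1.0, 0.0], [0.0, 1.0]]  # invented 2-d embeddings
entity_vector = [1.0, 1.0]                     # invented entity embedding
closeness = cosine(centroid(query_term_vectors), entity_vector)
```

High entropy and a small margin both signal a risky annotation, which is why a ranking model would pay less attention to that entity.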

For now, it is important to have surface-level familiarity with these six entity-centric algorithms.

The main takeaway is that two approaches exist: projecting documents to a latent entity layer and explicit entity annotations of documents.

Three types of data structures

The image above shows the complex relationships that exist in vector space. While the example shows knowledge graph connections, this same pattern can be replicated on a page-by-page schema level.

To understand entities, it is important to know the three types of data structures that algorithms use.

- Using unstructured entity descriptions, references to other entities must be recognized and disambiguated. Directed edges (hyperlinks) are added from each entity to all the other entities mentioned in its description.

- In a semi-structured setting (i.e., Wikipedia), links to other entities might be explicitly provided.

- When working with structured data, RDF triples define a graph (i.e., the knowledge graph). Specifically, subject and object resources (URIs) are nodes, and predicates are edges.
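
To show how triples become a graph, here is a minimal sketch using plain Python data structures. The triples and the `ex:` identifiers are invented stand-ins for real URIs, and `build_graph` is a hypothetical helper, not part of any RDF library.

```python
from collections import defaultdict

# Invented (subject, predicate, object) triples; "ex:" stands in for real URIs.
TRIPLES = [
    ("ex:Rainbow_trout", "ex:memberOf", "ex:Salmonidae"),
    ("ex:Rainbow_trout", "ex:caughtWith", "ex:Fly_fishing"),
    ("ex:Fly_fishing", "ex:uses", "ex:Artificial_fly"),
]

def build_graph(triples):
    """Turn triples into an adjacency list plus the set of all nodes.

    Subjects and objects become nodes; each predicate labels a directed edge.
    """
    graph = defaultdict(list)
    nodes = set()
    for subject, predicate, obj in triples:
        graph[subject].append((predicate, obj))
        nodes.update((subject, obj))
    return dict(graph), nodes

def neighbors(graph, node):
    """Entities reachable from `node` by following one edge."""
    return [obj for _predicate, obj in graph.get(node, [])]

graph, nodes = build_graph(TRIPLES)
print(len(nodes))                            # 4
print(neighbors(graph, "ex:Rainbow_trout"))  # ['ex:Salmonidae', 'ex:Fly_fishing']
```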

The problem with a semi-structured and distracting context for IR score is that if a document is not configured for a single topic, the IR score can be diluted by the two different contexts, resulting in a relative rank lost to another textual document.

IR score dilution involves poorly structured lexical relations and bad word proximity.

The relevant words that complete each other should be used closely within a paragraph or section of the document to signal the context more clearly and increase the IR score.

Utilizing entity attributes and relationships yields relative improvements in the 5–20% range. Exploiting entity-type information is even more rewarding, with relative improvements ranging from 25% to over 100%.

Annotating documents with entities can bring structure to unstructured documents, which can help populate knowledge bases with new information about entities.

Using Wikipedia as your entity SEO framework

Structure of Wikipedia pages

- Title (I.)

- Lead section (II.)

  - Disambiguation links (II.a)

  - Infobox (II.b)

  - Introductory text (II.c)

- Table of contents (III.)

- Body content (IV.)

- Appendices and bottom matter (V.)

  - References and notes (V.a)

  - External links (V.b)

  - Categories (V.c)

Most Wikipedia articles include an introductory text, the “lead,” a brief summary of the article that is usually no more than four paragraphs long. This should be written in a way that creates interest in the article.

The first sentence and the opening paragraph bear special importance. The first sentence “can be thought of as the definition of the entity described in the article.” The first paragraph offers a more elaborate definition without too much detail.

The value of links extends beyond navigational purposes; they capture semantic relationships between articles. In addition, anchor texts are a rich source of entity name variants. Wikipedia links may be used, among others, to help identify and disambiguate entity mentions in text.

- Summarize key facts about the entity (infobox).

- Brief introduction.

- Internal links. A key rule given to editors is to link only to the first occurrence of an entity or concept.

- Include all popular synonyms for an entity.

- Category page designation.

- Navigation template.

- References.

- Special parsing tools for understanding Wiki pages.

- Multiple media types.

How to optimize for entities

What follows are key considerations when optimizing entities for search:

- The inclusion of semantically related words on a page.

- Word and phrase frequency on a page.

- The organization of concepts on a page.

- Including unstructured data, semi-structured data, and structured data on a page.

- Subject-Predicate-Object Pairs (SPO).

- Web documents on a site that function as pages of a book.

- Organization of web documents on a website.

- Include concepts on a web document that are known features of entities.

Important note: When the emphasis is on the relationships between entities, a knowledge base is often referred to as a knowledge graph.

Since intent is being analyzed in conjunction with user search logs and other bits of context, the same search phrase from person 1 could generate a different result from person 2. The person may have a different intent with the exact same query.

If your page covers both types of intent, then your page is a better candidate for web ranking. You can use the structure of knowledge bases to guide your query-intent templates (as mentioned in a previous section).

People Also Ask, People Search For, and Autocomplete are semantically related to the submitted query and either dive deeper into the current search direction or move to a different aspect of the search task.

We know this, so how can we optimize for it?

Your documents should contain as many search intent variations as possible. Your website should contain every search intent variation for your cluster. Clustering relies on three types of similarity:

- Lexical similarity.

- Semantic similarity.

- Click similarity.
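
One plausible way to read these three similarity types is sketched below. The specific measures are assumptions chosen for illustration (Jaccard overlap for lexical and click similarity, cosine over embeddings for semantic similarity), and the queries, vectors, and URLs are invented; the article does not say which measures a search engine actually uses.

```python
import math

def jaccard(a, b):
    """Share of items two collections have in common."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0

def lexical_similarity(query1, query2):
    """Overlap between the words of two queries."""
    return jaccard(query1.lower().split(), query2.lower().split())

def semantic_similarity(vector1, vector2):
    """Cosine similarity between two query embeddings (supplied by the caller)."""
    dot = sum(x * y for x, y in zip(vector1, vector2))
    norm = (math.sqrt(sum(x * x for x in vector1))
            * math.sqrt(sum(y * y for y in vector2)))
    return dot / norm if norm else 0.0

def click_similarity(clicked_urls1, clicked_urls2):
    """Overlap between the results users clicked for two queries."""
    return jaccard(clicked_urls1, clicked_urls2)

print(lexical_similarity("fly fishing rods", "best fly fishing rods"))  # 0.75
clicks = click_similarity(
    ["https://example.com/rods", "https://example.com/guide"],
    ["https://example.com/rods", "https://example.com/reels"],
)
```

Two queries can score low lexically yet high on clicks, which is one reason pages that serve several phrasings of the same intent tend to be grouped into one cluster.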

Topic coverage

What is it → Attribute list → Section dedicated to each attribute → Each section links to an article fully dedicated to that topic → The audience should be specified and definitions for the sub-section should be specified → What should be considered? → What are the benefits? → Modifier benefits → What is ___ → What does it do? → How to get it → How to do it → Who can do it → Link back to all categories

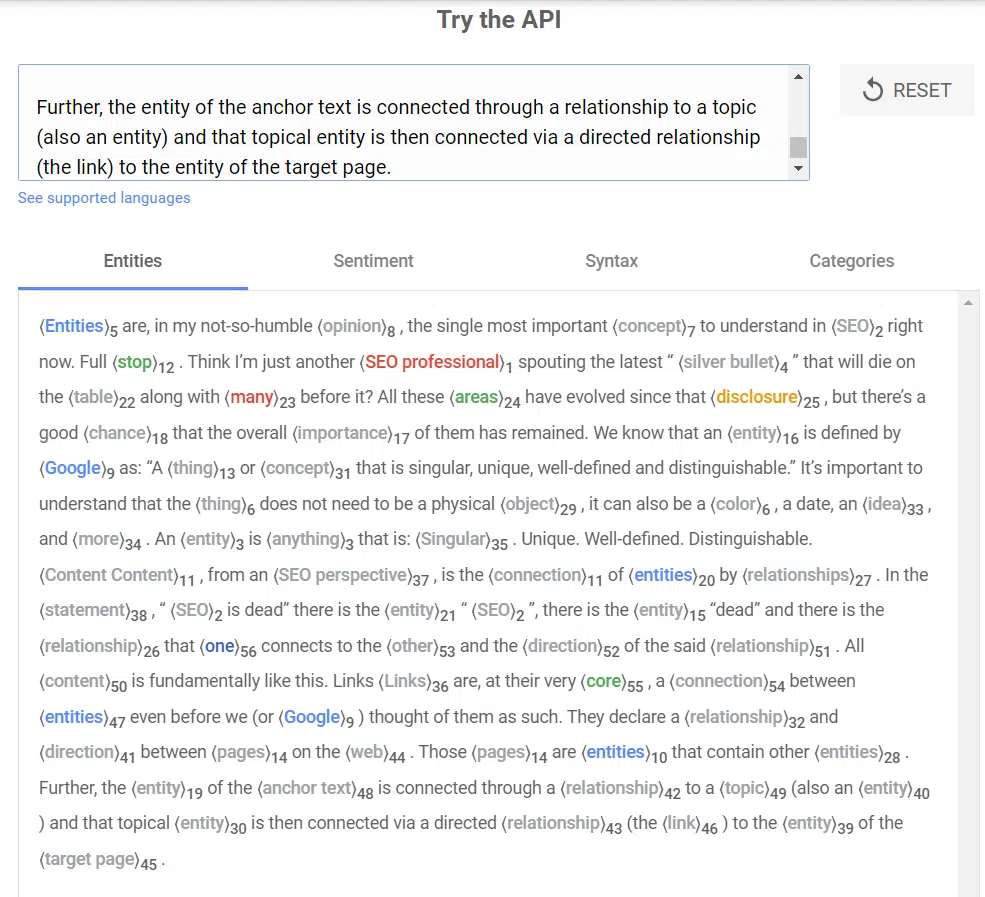

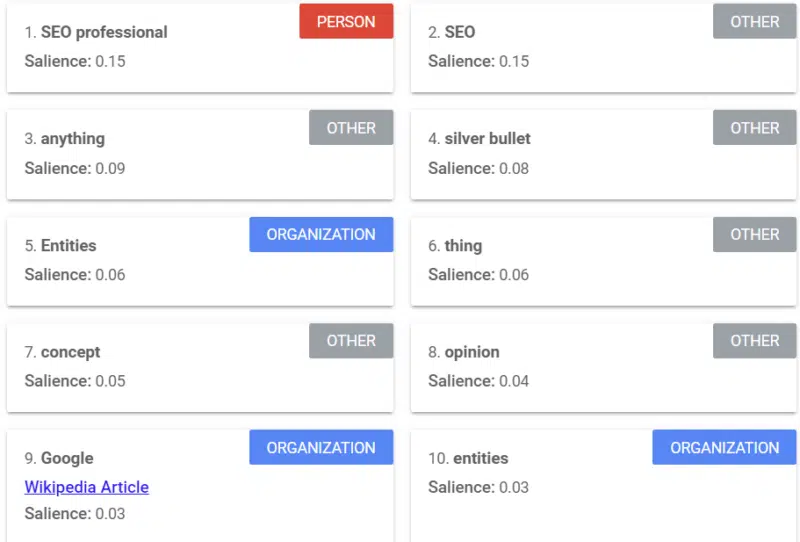

Google offers a tool that provides a salience score (similar to how we use the word “strength” or “confidence”) that tells you how Google sees the content.

The example above comes from a Search Engine Land article on entities from 2018.

You can see person, other, and organization entities in the example. The tool is Google Cloud’s Natural Language API.

Every word, sentence, and paragraph matters when talking about an entity. How you organize your thoughts can change Google’s understanding of your content.

You may include a keyword about SEO, but does Google understand that keyword the way you want it to be understood?

Try placing a paragraph or two into the tool, then reorganize and modify the text to see how salience increases or decreases.
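
If you would rather script this exercise than use the web demo, a sketch along these lines may help. It assumes the `google-cloud-language` Python client is installed and that credentials are configured; `salience_shift` is a hypothetical helper, and the sample tuples at the bottom are invented rather than real API output.

```python
def analyze_entities(text):
    """Return (name, type, salience) tuples from the Cloud Natural Language API.

    Assumes the google-cloud-language package and working credentials.
    """
    # Imported lazily so the comparison helper below works without the package.
    from google.cloud import language_v1

    client = language_v1.LanguageServiceClient()
    document = language_v1.Document(
        content=text, type_=language_v1.Document.Type.PLAIN_TEXT
    )
    response = client.analyze_entities(request={"document": document})
    return [
        (entity.name, language_v1.Entity.Type(entity.type_).name, entity.salience)
        for entity in response.entities
    ]

def salience_shift(before, after):
    """Per-entity change in salience between two drafts of a passage."""
    old = {name: salience for name, _type, salience in before}
    new = {name: salience for name, _type, salience in after}
    return {
        name: round(new.get(name, 0.0) - old.get(name, 0.0), 3)
        for name in sorted(set(old) | set(new))
    }

# Invented results standing in for two calls to analyze_entities().
draft_1 = [("SEO", "OTHER", 0.40), ("Google", "ORGANIZATION", 0.30)]
draft_2 = [("SEO", "OTHER", 0.55), ("Google", "ORGANIZATION", 0.20)]
print(salience_shift(draft_1, draft_2))  # {'Google': -0.1, 'SEO': 0.15}
```

Running `analyze_entities` on each revision and diffing the results makes it easier to see which edits moved the entity you care about.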

This exercise, called “disambiguation,” is incredibly important for entities. Language is ambiguous, so we must make our words less ambiguous to Google.

Modern disambiguation approaches consider three types of evidence:

- Prior importance of entities and mentions.

- Contextual similarity between the text surrounding the mention and the candidate entity.

- Coherence among all entity-linking decisions in the document.
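
The three kinds of evidence can be combined in a toy scorer like the one below. Everything here is an assumption made for illustration: the candidate entities, their priors and context words, the use of word overlap as the similarity measure, and the weights. Real entity linkers learn these from data.

```python
# Invented candidates for the mention "jaguar": a prior (how often the mention
# refers to each entity) and a few words that describe each entity.
CANDIDATES = {
    "Jaguar_(animal)": {"prior": 0.3,
                        "context": {"cat", "jungle", "predator", "prey"}},
    "Jaguar_Cars": {"prior": 0.7,
                    "context": {"car", "engine", "luxury", "sedan"}},
}

def overlap(words_a, words_b):
    """Jaccard overlap between two collections of words."""
    a, b = set(words_a), set(words_b)
    return len(a & b) / len(a | b) if a | b else 0.0

def coherence(entity_context, linked_contexts):
    """Average overlap with entities already linked elsewhere in the document."""
    if not linked_contexts:
        return 0.0
    return (sum(overlap(entity_context, other) for other in linked_contexts)
            / len(linked_contexts))

def disambiguate(mention_context, candidates, linked_contexts,
                 weights=(0.2, 0.5, 0.3)):
    """Score each candidate by prior, context similarity, and coherence."""
    w_prior, w_context, w_coherence = weights  # hypothetical weights
    scores = {
        entity_id: (w_prior * info["prior"]
                    + w_context * overlap(mention_context, info["context"])
                    + w_coherence * coherence(info["context"], linked_contexts))
        for entity_id, info in candidates.items()
    }
    return max(scores, key=scores.get), scores

sentence_words = ["the", "jaguar", "stalks", "prey", "jungle"]
already_linked = [{"jungle", "river", "rainforest"}]  # e.g. a rainforest entity
best, scores = disambiguate(sentence_words, CANDIDATES, already_linked)
print(best)  # Jaguar_(animal)
```

With no surrounding context, the prior alone would pick the car brand. The words around the mention and the other entities on the page are what tip the decision, which is the practical argument for writing unambiguous copy.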

Schema is one of my favorite ways of disambiguating content. You are linking entities in your blog to knowledge repositories. Balog says:

“[L]inking entities in unstructured text to a structured knowledge repository can greatly empower users in their information consumption activities.”

For instance, readers of a document can acquire contextual or background information with a single click, and they can gain easy access to related entities.

Entity annotations can also be used in downstream processing to improve retrieval performance or to facilitate better user interaction with search results.

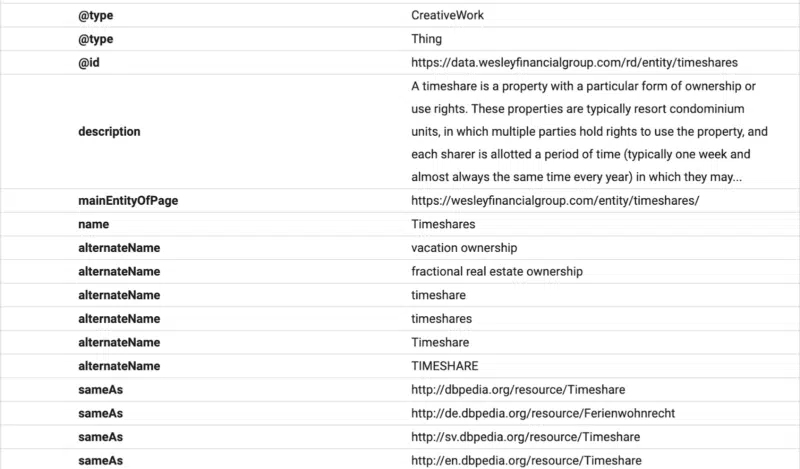

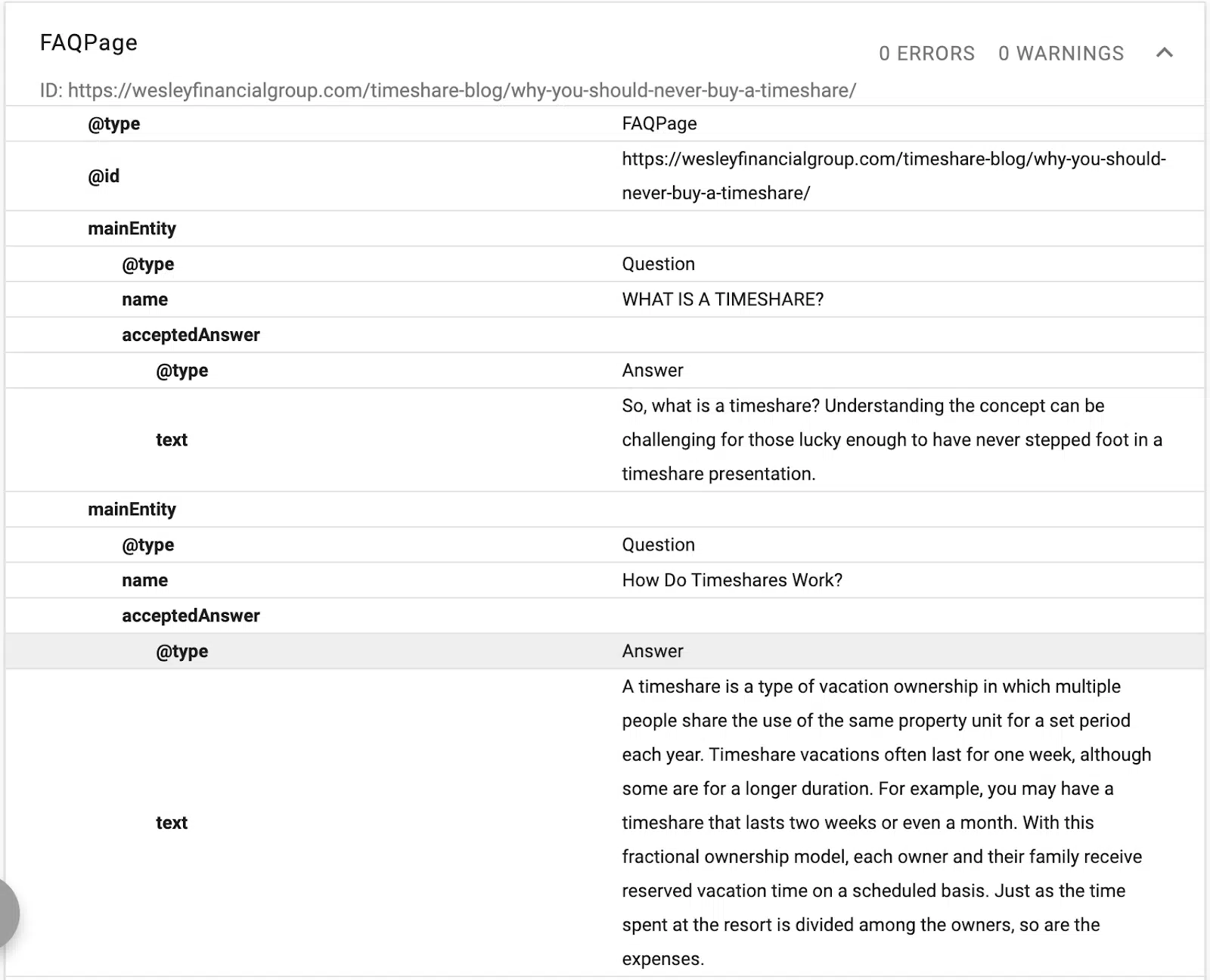

Here you can see that the FAQ content is structured for Google using FAQ schema.

In this example, you can see schema providing a description of the text, an ID, and a declaration of the main entity of the page.

(Remember, Google wants to understand the hierarchy of the content, which is why H1–H6 is important.)

You’ll see alternate name and same-as declarations (the Schema.org `alternateName` and `sameAs` properties). Now, when Google reads the content, it will know which structured database to associate with the text, and it will have synonyms and alternative versions of a word linked to the entity.
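
For readers without access to the screenshots, here is a minimal sketch of markup with the same ingredients: a description, an `@id`, a `mainEntity`, and `alternateName` and `sameAs` declarations. The page URL, description, and helper name are invented, and this is not a reproduction of the markup shown in the images.

```python
import json

def entity_page_jsonld(page_url, name, description, alternate_names, same_as):
    """Build JSON-LD that declares the main entity of a page.

    Hypothetical helper; the property names are from Schema.org.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "WebPage",
        "@id": page_url,
        "description": description,
        "mainEntity": {
            "@type": "Thing",
            "name": name,
            "alternateName": alternate_names,  # synonyms and spelling variants
            "sameAs": same_as,                 # the same entity in other catalogs
        },
    }
    return json.dumps(data, indent=2)

entity_markup = entity_page_jsonld(
    page_url="https://example.com/fly-fishing/",  # invented URL
    name="Fly fishing",
    description="An introduction to fly fishing techniques and tackle.",
    alternate_names=["flyfishing"],
    same_as=["https://en.wikipedia.org/wiki/Fly_fishing"],
)
print(entity_markup)
```

The `sameAs` URL is what ties the text on your page to an entry in an entity catalog, so it should point to the catalog page for exactly the entity you mean.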

When you optimize with schema, you optimize for NER (named entity recognition), also known as entity identification, entity extraction, and entity chunking.

The idea is to engage in Named Entity Disambiguation > Wikification > Entity Linking.

“The advent of Wikipedia has facilitated large-scale entity recognition and disambiguation by providing a comprehensive catalog of entities along with other invaluable resources (specifically, hyperlinks, categories, and redirection and disambiguation pages).”

– Entity-Oriented Search

Most SEOs use some on-page tool for optimizing their content. Every tool is limited in its ability to identify unique content opportunities and content depth suggestions.

For the most part, on-page tools are just aggregating the top SERP results and creating an average for you to emulate.

SEOs must remember that Google is not looking for the same rehashed information. You can copy what others are doing, but unique information is the key to becoming a seed site/authority site.

Here is a simplified description of how Google handles new content:

Once a document has been found to mention a given entity, that document may be checked to possibly discover new facts with which the knowledge base entry of that entity may be updated.

Balog writes:

“We wish to help editors stay on top of changes by automatically identifying content (news articles, blog posts, etc.) that may imply modifications to the KB entries of a certain set of entities of interest (i.e., entities that a given editor is responsible for).”

Anyone who improves knowledge bases, entity recognition, and crawlability of information will get Google’s love.

Changes made in the knowledge repository can be traced back to the document as the original source.

If you provide content that covers the topic and you add a level of depth that is rare or new, Google can identify whether your document added that unique information.

Eventually, this new information sustained over a period of time could lead to your website becoming an authority.

This isn’t authoritativeness based on domain rating but on topical coverage, which I believe is far more valuable.

With the entity approach to SEO, you aren’t limited to targeting keywords with search volume.

All you need to do is validate the head term (“fly fishing rods,” for example), and then you can focus on targeting search intent variations based on good old-fashioned human thinking.

We begin with Wikipedia. For the example of fly fishing, we can see that, at a minimum, the following concepts should be covered on a fishing website:

- Fish species, history, origins, development, technological improvements, expansion, methods of fly fishing, casting, spey casting, fly fishing for trout, techniques for fly fishing, fishing in cold water, dry fly trout fishing, nymphing for trout, still water trout fishing, playing trout, releasing trout, saltwater fly fishing, tackle, artificial flies, and knots.

The topics above came from the fly fishing Wikipedia page. While this page provides a great overview of topics, I like to add additional topic ideas that come from semantically related topics.

For the topic “fish,” we can add several more topics, including etymology, evolution, anatomy and physiology, fish communication, fish diseases, conservation, and importance to humans.

Has anyone linked the anatomy of trout to the effectiveness of certain fishing techniques?

Has a single fishing website covered all fish types while linking the types of fishing techniques, rods, and bait to each fish?

By now, you should be able to see how the topic expansion can grow. Keep this in mind when planning a content campaign.

Don’t just rehash. Add value. Be unique. Use the algorithms mentioned in this article as your guide.

Conclusion

This article is part of a series of articles focused on entities. In the next article, I’ll dive deeper into the optimization efforts around entities and some entity-focused tools on the market.

I want to end this article by giving a shout-out to two people who explained many of these concepts to me.

Bill Slawski of SEO by the Sea and Koray Tugbert of Holistic SEO. While Slawski is no longer with us, his contributions continue to have a ripple effect in the SEO industry.

I rely heavily on the following sources for the article content, as these are the best sources that exist on the topic:

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.