There’s rather a lot to learn about search intent, from utilizing deep learning to infer search intent by classifying textual content and breaking down SERP titles using Natural Language Processing (NLP) methods, to clustering based on semantic relevance with the advantages defined.

Not solely do we all know the advantages of deciphering search intent — now we have a quantity of methods at our disposal for scale and automation, too.

But typically, these contain constructing your personal AI. What should you don’t have the time nor the information for that?

In this column, you’ll study a step-by-step course of for automating key phrase clustering by search intent utilizing Python.

Advertisement

Continue Reading Below

SERPs Contain Insights for Search Intent

Some strategies require that you simply get all of the copy from titles of the rating content material for a given key phrase, then feed it right into a neural community mannequin (which you’ve gotten to then construct and take a look at), or possibly you’re utilizing NLP to cluster key phrases.

There is one other technique that permits you to use Google’s very personal AI to do the be just right for you, with out having to scrape all the SERPs content material and construct an AI mannequin.

Let’s assume that Google ranks website URLs by the probability of the content material satisfying the person question in descending order. It follows that if the intent for 2 key phrases is the similar, then the SERPs are possible to be comparable.

Advertisement

Continue Reading Below

For years, many web optimization professionals in contrast SERP outcomes for keywords to infer shared (or shared) search intent to keep on prime of Core Updates, so that is nothing new.

The value-add right here is the automation and scaling of this comparability, providing each velocity and higher precision.

How to Cluster Keywords by Search Intent at Scale Using Python (With Code)

Begin together with your SERPs ends in a CSV obtain.

1. Import the checklist into your Python pocket book.

import pandas as pd

import numpy as np

serps_input = pd.read_csv('knowledge/sej_serps_input.csv')

del serps_input['Unnamed: 0']

serps_input

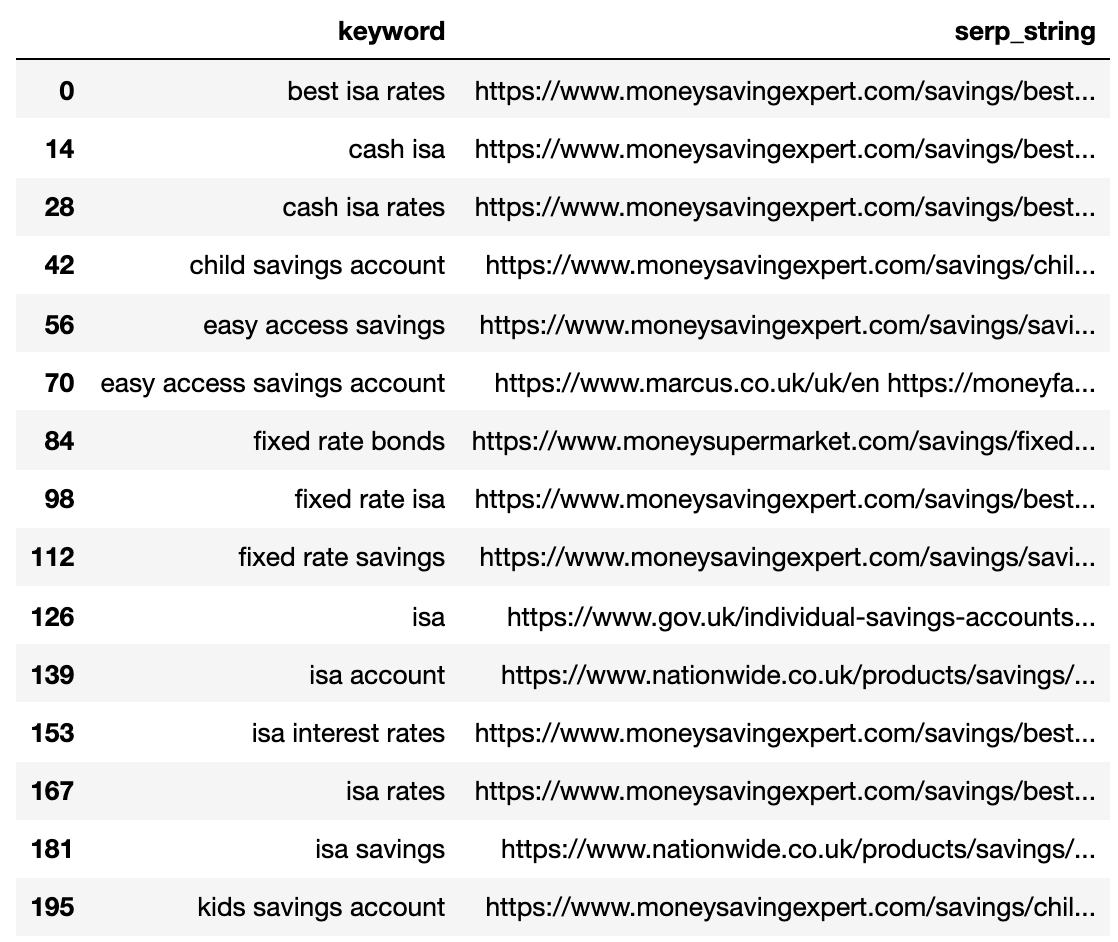

Below is the SERPs file now imported right into a Pandas dataframe.

2. Filter Data for Page 1

We need to examine the Page 1 outcomes of every SERP between key phrases.

We’ll break up the dataframe into mini key phrase dataframes to run the filtering perform earlier than recombining right into a single dataframe, as a result of we wish to filter at key phrase stage:

# Split

serps_grpby_keyword = serps_input.groupby("key phrase")

k_urls = 15

# Apply Combine

def filter_k_urls(group_df):

filtered_df = group_df.loc[group_df['url'].notnull()]

filtered_df = filtered_df.loc[filtered_df['rank'] <= k_urls]

return filtered_df

filtered_serps = serps_grpby_keyword.apply(filter_k_urls)

# Combine

## Add prefix to column names

#normed = normed.add_prefix('normed_')

# Concatenate with preliminary knowledge body

filtered_serps_df = pd.concat([filtered_serps],axis=0)

del filtered_serps_df['keyword']

filtered_serps_df = filtered_serps_df.reset_index()

del filtered_serps_df['level_1']

filtered_serps_df

3. Convert Ranking URLs to a String

Because there are extra SERP consequence URLs than key phrases, we'd like to compress these URLs right into a single line to signify the key phrase’s SERP.

Here’s how:

# convert outcomes to strings utilizing Split Apply Combine

filtserps_grpby_keyword = filtered_serps_df.groupby("key phrase")

def string_serps(df):

df['serp_string'] = ''.be part of(df['url'])

return df

# Combine

strung_serps = filtserps_grpby_keyword.apply(string_serps)

# Concatenate with preliminary knowledge body and clear

strung_serps = pd.concat([strung_serps],axis=0)

strung_serps = strung_serps[['keyword', 'serp_string']]#.head(30)

strung_serps = strung_serps.drop_duplicates()

strung_serps

Below exhibits the SERP compressed right into a single line for every key phrase.

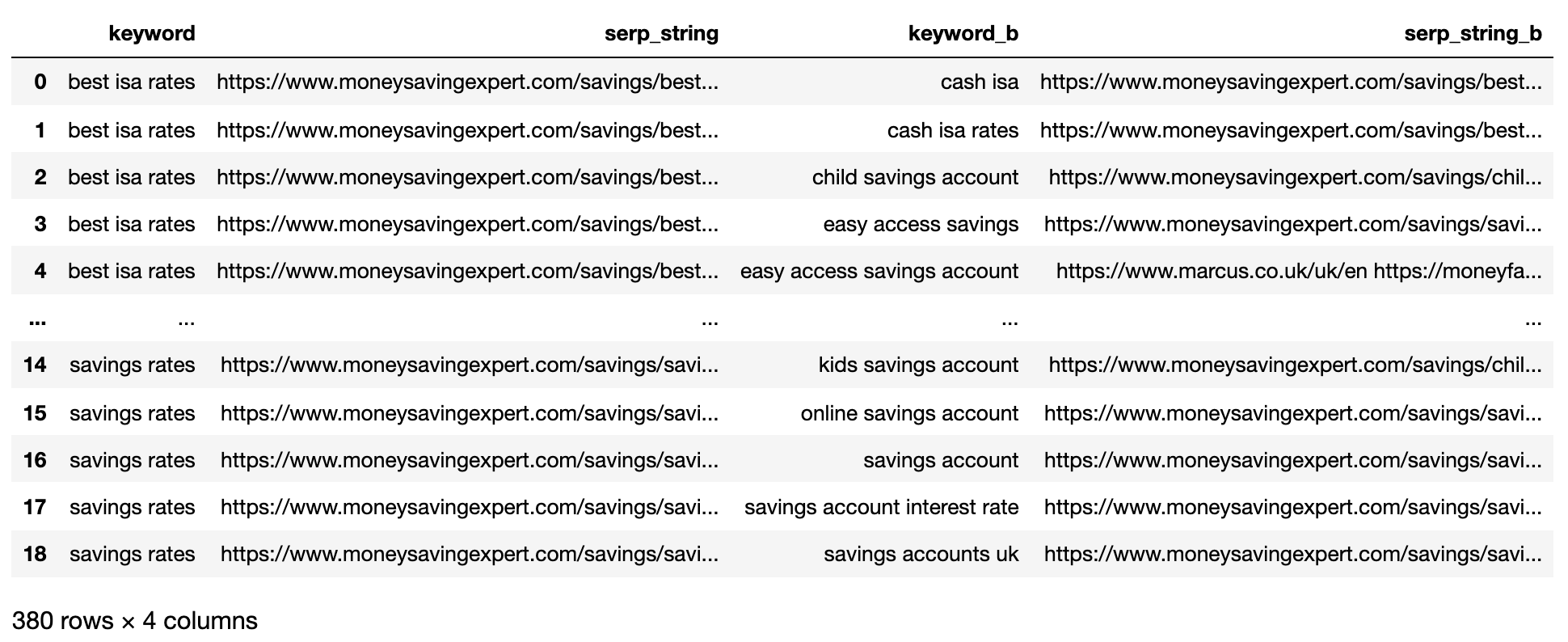

4. Compare SERP Similarity

To carry out the comparability, we now want each mixture of key phrase SERP paired with different pairs:

Advertisement

Continue Reading Below

# align serps

def serps_align(ok, df):

prime_df = df.loc[df.keyword == k]

prime_df = prime_df.rename(columns = {"serp_string" : "serp_string_a", 'key phrase': 'keyword_a'})

comp_df = df.loc[df.keyword != k].reset_index(drop=True)

prime_df = prime_df.loc[prime_df.index.repeat(len(comp_df.index))].reset_index(drop=True)

prime_df = pd.concat([prime_df, comp_df], axis=1)

prime_df = prime_df.rename(columns = {"serp_string" : "serp_string_b", 'key phrase': 'keyword_b', "serp_string_a" : "serp_string", 'keyword_a': 'key phrase'})

return prime_df

columns = ['keyword', 'serp_string', 'keyword_b', 'serp_string_b']

matched_serps = pd.DataBody(columns=columns)

matched_serps = matched_serps.fillna(0)

queries = strung_serps.key phrase.to_list()

for q in queries:

temp_df = serps_align(q, strung_serps)

matched_serps = matched_serps.append(temp_df)

matched_serps

The above exhibits all of the key phrase SERP pair mixtures, making it prepared for SERP string comparability.

There is not any open supply library that compares checklist objects by order, so the perform has been written for you under.

Advertisement

Continue Reading Below

The perform ‘serp_compare’ compares the overlap of websites and the order of these websites between SERPs.

import py_stringmatching as sm

ws_tok = sm.WhitespaceTokenizer()

# Only examine the prime k_urls outcomes

def serps_similarity(serps_str1, serps_str2, ok=15):

denom = ok+1

norm = sum([2*(1/i - 1.0/(denom)) for i in range(1, denom)])

ws_tok = sm.WhitespaceTokenizer()

serps_1 = ws_tok.tokenize(serps_str1)[:k]

serps_2 = ws_tok.tokenize(serps_str2)[:k]

match = lambda a, b: [b.index(x)+1 if x in b else None for x in a]

pos_intersections = [(i+1,j) for i,j in enumerate(match(serps_1, serps_2)) if j is not None]

pos_in1_not_in2 = [i+1 for i,j in enumerate(match(serps_1, serps_2)) if j is None]

pos_in2_not_in1 = [i+1 for i,j in enumerate(match(serps_2, serps_1)) if j is None]

a_sum = sum([abs(1/i -1/j) for i,j in pos_intersections])

b_sum = sum([abs(1/i -1/denom) for i in pos_in1_not_in2])

c_sum = sum([abs(1/i -1/denom) for i in pos_in2_not_in1])

intent_prime = a_sum + b_sum + c_sum

intent_dist = 1 - (intent_prime/norm)

return intent_dist

# Apply the perform

matched_serps['si_simi'] = matched_serps.apply(lambda x: serps_similarity(x.serp_string, x.serp_string_b), axis=1)

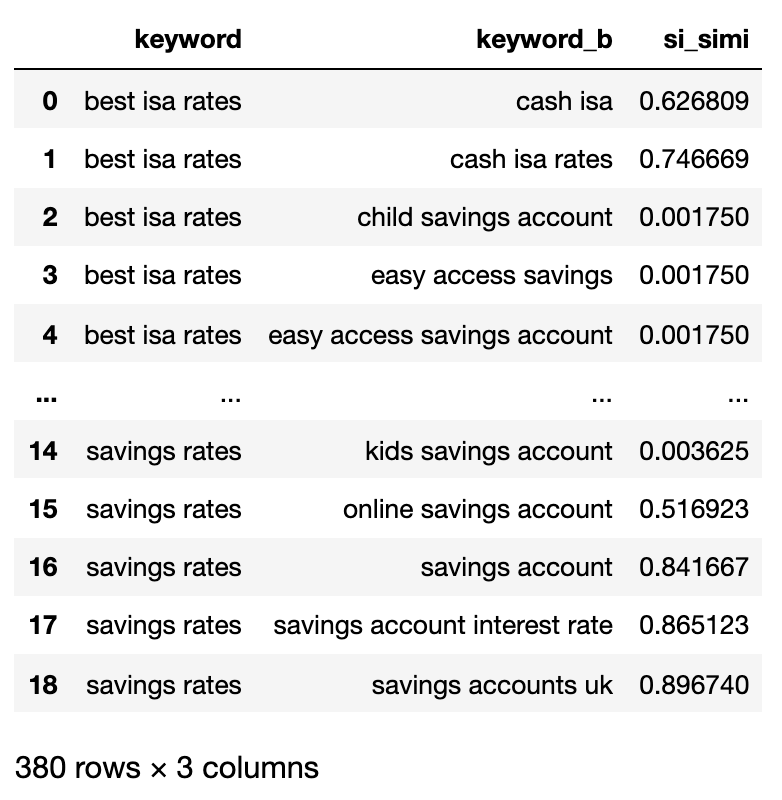

matched_serps[["keyword", "keyword_b", "si_simi"]]

Now that the comparisons have been executed, we are able to begin clustering key phrases.

Advertisement

Continue Reading Below

We will likely be treating any key phrases which have a weighted similarity of 40% or extra.

# group key phrases by search intent

simi_lim = 0.4

# be part of search quantity

keysv_df = serps_input[['keyword', 'search_volume']].drop_duplicates()

keysv_df.head()

# append matter vols

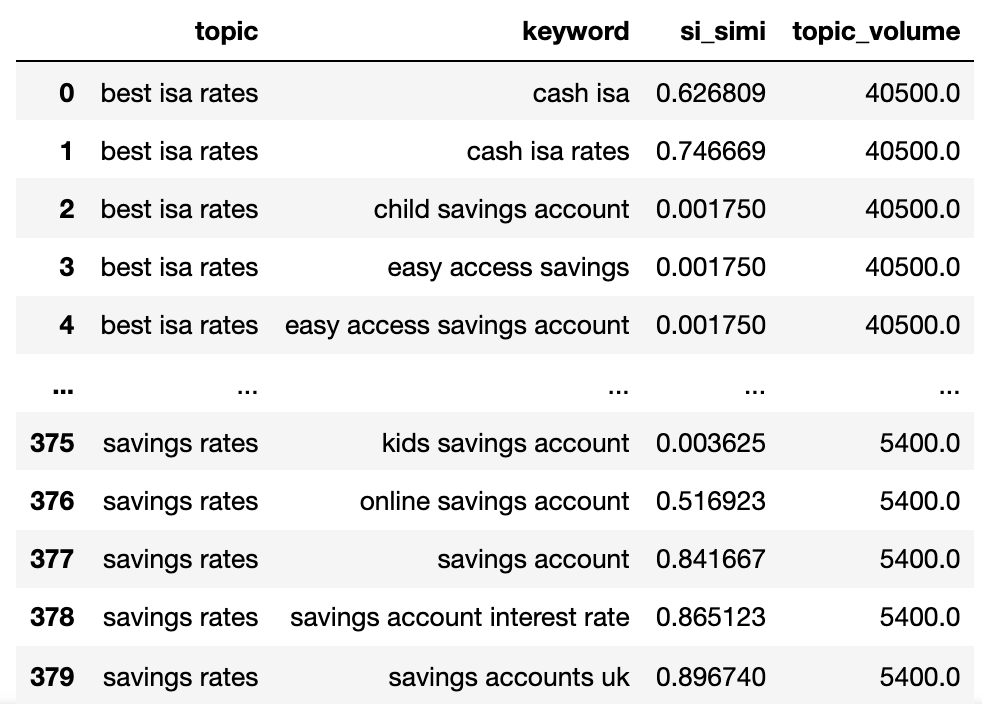

keywords_crossed_vols = serps_compared.merge(keysv_df, on = 'key phrase', how = 'left')

keywords_crossed_vols = keywords_crossed_vols.rename(columns = {'key phrase': 'matter', 'keyword_b': 'key phrase',

'search_volume': 'topic_volume'})

# sim si_simi

keywords_crossed_vols.sort_values('topic_volume', ascending = False)

# strip NANs

keywords_filtered_nonnan = keywords_crossed_vols.dropna()

keywords_filtered_nonnan

We now have the potential matter identify, key phrases SERP similarity, and search volumes of every.

You’ll be aware that key phrase and keyword_b have been renamed to matter and key phrase, respectively.

Advertisement

Continue Reading Below

Now we’re going to iterate over the columns in the dataframe utilizing the lamdas approach.

The lamdas approach is an environment friendly manner to iterate over rows in a Pandas dataframe as a result of it converts rows to a listing as opposed to the .iterrows() perform.

Here goes:

queries_in_df = checklist(set(keywords_filtered_nonnan.matter.to_list()))

topic_groups_numbered = {}

topics_added = []

def find_topics(si, keyw, topc):

i = latest_index(topic_groups_numbered)

if (si >= simi_lim) and (not keyw in topics_added) and (not topc in topics_added):

i += 1

topics_added.append(keyw)

topics_added.append(topc)

topic_groups_numbered[i] = [keyw, topc]

elif si >= simi_lim and (keyw in topics_added) and (not topc in topics_added):

j = [key for key, value in topic_groups_numbered.items() if keyw in value]

topics_added.append(topc)

topic_groups_numbered[j[0]].append(topc)

elif si >= simi_lim and (not keyw in topics_added) and (topc in topics_added):

j = [key for key, value in topic_groups_numbered.items() if topc in value]

topics_added.append(keyw)

topic_groups_numbered[j[0]].append(keyw)

def apply_impl_ft(df):

return df.apply(

lambda row:

find_topics(row.si_simi, row.key phrase, row.matter), axis=1)

apply_impl_ft(keywords_filtered_nonnan)

topic_groups_numbered = {ok:checklist(set(v)) for ok, v in topic_groups_numbered.objects()}

topic_groups_numbered

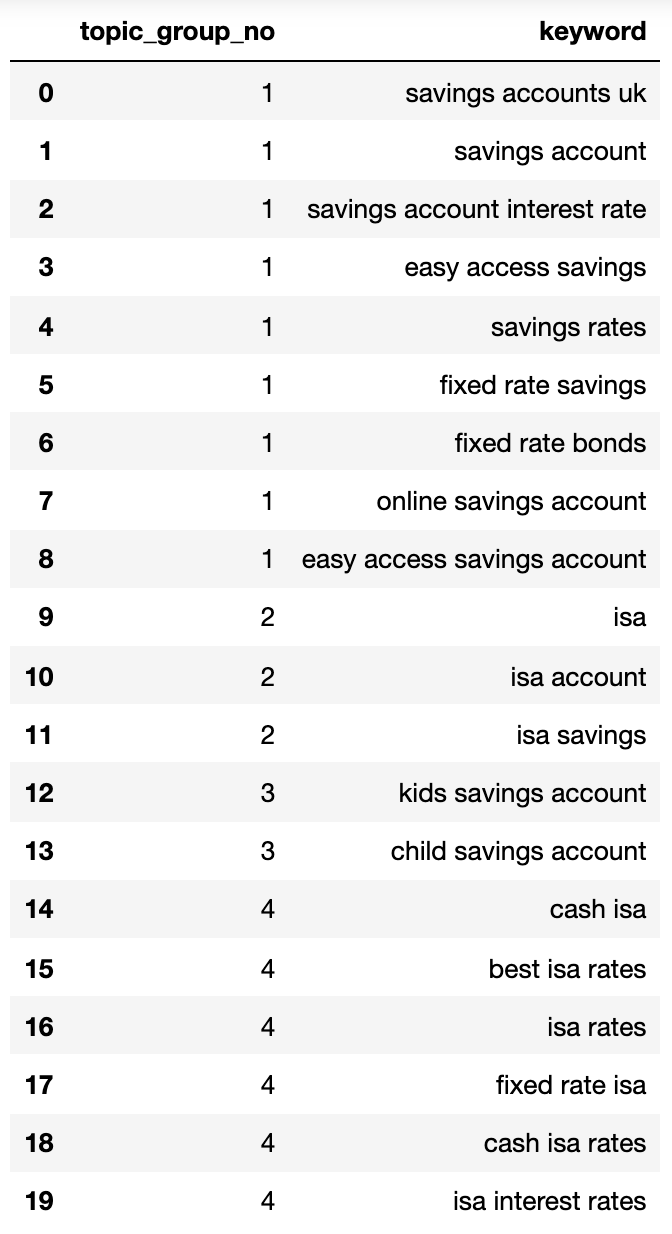

Below exhibits a dictionary containing all the key phrases clustered by search intent into numbered teams:

{1: ['fixed rate isa',

'isa rates',

'isa interest rates',

'best isa rates',

'cash isa',

'cash isa rates'],

2: ['child savings account', 'kids savings account'],

3: ['savings account',

'savings account interest rate',

'savings rates',

'fixed rate savings',

'easy access savings',

'fixed rate bonds',

'online savings account',

'easy access savings account',

'savings accounts uk'],

4: ['isa account', 'isa', 'isa savings']}

Let’s stick that right into a dataframe:

topic_groups_lst = []

for ok, l in topic_groups_numbered.objects():

for v in l:

topic_groups_lst.append([k, v])

topic_groups_dictdf = pd.DataBody(topic_groups_lst, columns=['topic_group_no', 'keyword'])

topic_groups_dictdf

The search intent teams above present approximation of the key phrases inside them, one thing that an web optimization professional would possible obtain.

Advertisement

Continue Reading Below

Although we solely used a small set of key phrases, the technique can clearly be scaled to 1000's (if no more).

Activating the Outputs to Make Your Search Better

Of course, the above might be taken additional utilizing neural networks processing the rating content material for extra correct clusters and cluster group naming, as some of the industrial merchandise on the market already do.

For now, with this output you possibly can:

- Incorporate this into your personal web optimization dashboard methods to make your tendencies and SEO reporting extra significant.

- Build higher paid search campaigns by structuring your Google Ads accounts by search intent for a better Quality Score.

- Merge redundant side ecommerce search URLs.

- Structure a buying website’s taxonomy in accordance to search intent as a substitute of a typical product catalog.

Advertisement

Continue Reading Below

I’m positive there are extra purposes that I haven’t talked about — be happy to touch upon any essential ones that I’ve not already talked about.

In any case, your web optimization key phrase analysis simply received that little bit extra scalable, correct, and faster!

More Resources:

Image Credits

Featured picture: Astibuag/Shutterstock.com

All screenshots taken by writer, July 2021

Advertisement

Continue Reading Below